Every few months, a new model drops with a bigger context window and the same promise implied between the lines:

“This time, memory is basically solved.”

It isn’t. And more importantly, it won’t be. Not by the big AI labs.

This isn’t a technical argument. It’s an economic and structural one.

AI has a memory problem so large that solving it properly would cost hundreds of billions of dollars, and doing so would undermine the very business models of the companies best positioned to attempt it.

Let’s break down why.

The Boy That Cried Wolf

Every time a new model launches with a longer context window, a familiar claim resurfaces: “RAG is dead.” The implication is that retrieval is a temporary hack, useful until models can simply “remember everything.”

Yet teams actually building production AI systems see the opposite. While online discourse declares retrieval obsolete, engineering teams are doubling down on it. This disconnect exists because RAG didn’t fail, it is evolving fast.

In 2023, RAG meant basic vector search plus a prompt. In real systems today, RAG looks very different: multi-step retrieval, query decomposition, iterative refinement, access controls, and evaluation pipelines. These aren’t luxuries. They’re requirements for systems operating in the real world, especially as AI agents move from demos into production.

Agents don’t just generate text. They plan, execute, iterate, and interact with external systems. To do that reliably, they need accurate, current, and scoped data. Training data alone isn’t enough, models have cutoffs, can’t see private data, and hallucinate when uncertain. Retrieval becomes the mechanism that grounds intelligence in reality. For agents, memory isn’t optional. It’s the foundation.

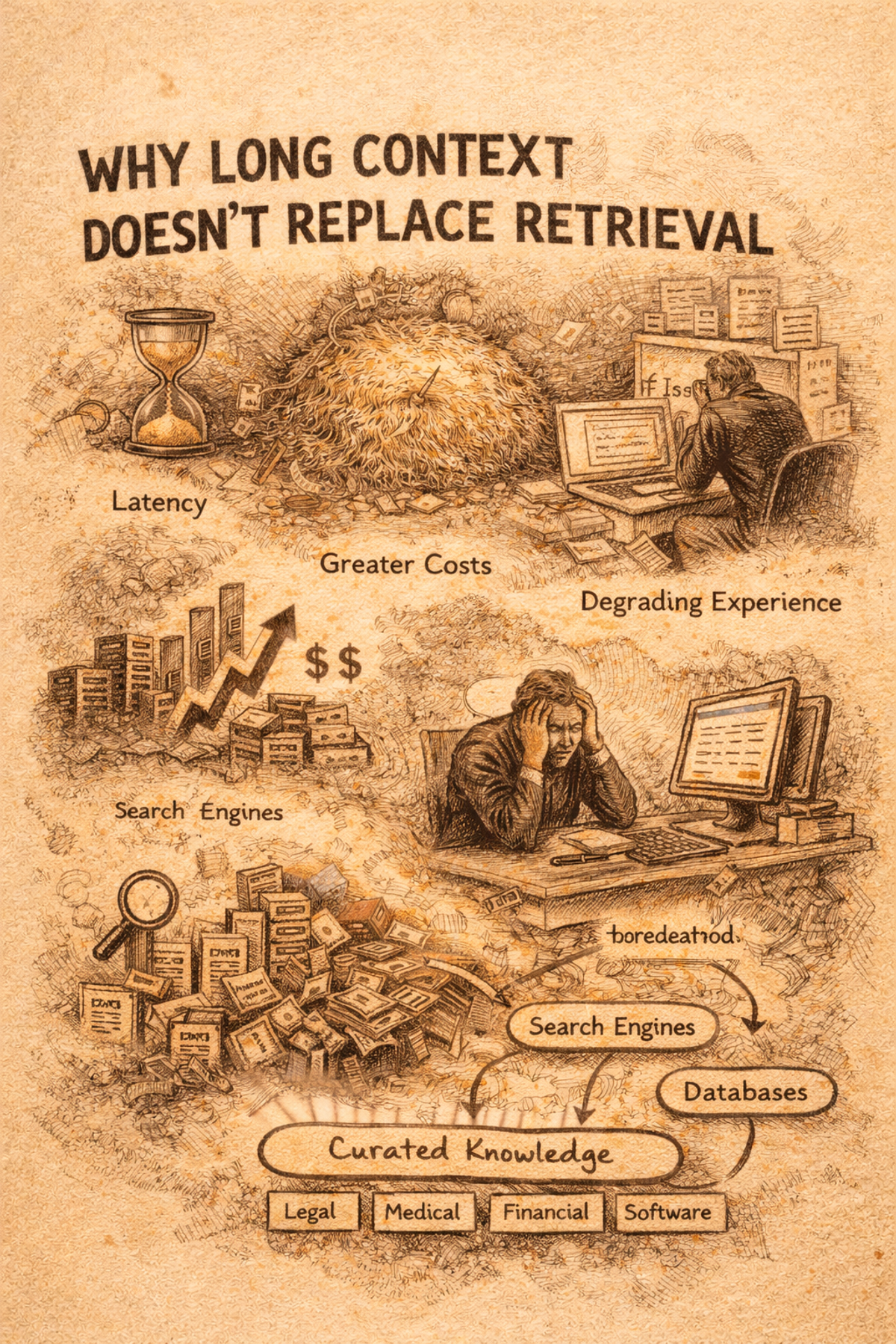

Why Long Context Doesn’t Replace Retrieval

This also explains why longer context windows don’t eliminate retrieval. If they did, we’d already be talking about the end of search engines and databases. Instead, every serious domain, finance, law, medicine, software, relies on curated, attributable knowledge bases. Bigger context doesn’t remove the need to find information efficiently.

Long context also introduces real, practical problems:

- Latency increases.

- Costs scale linearly with tokens.

- User experience degrades.

- Enterprise data grows faster than context windows ever will.

- Needle-in-haystack gets worse (important details get buried, irrelevant stuff pollutes reasoning).

The Context Window Reality No One Markets

And there’s another issue that rarely makes it into marketing copy of the frontier labs.

Huge context windows only look good on paper.

In practice, a so-called 1M token context doesn’t behave like 1M usable tokens. Long before you hit that number, the model starts to fall apart. It doesn’t just forget earlier parts, reasoning degrades, instructions blur together, and outputs become unstable. Anyone who’s actually pushed these limits knows the usable context is much closer to a fraction of the advertised size.

So no, bigger context isn’t “memory solved.” It’s mostly a bigger scratchpad with bigger failure modes.

The Trillion-Dollar Memory Problem

Which brings us to the part of the discussion that’s rarely framed honestly.

This isn’t primarily a technical problem. It’s an economic one.

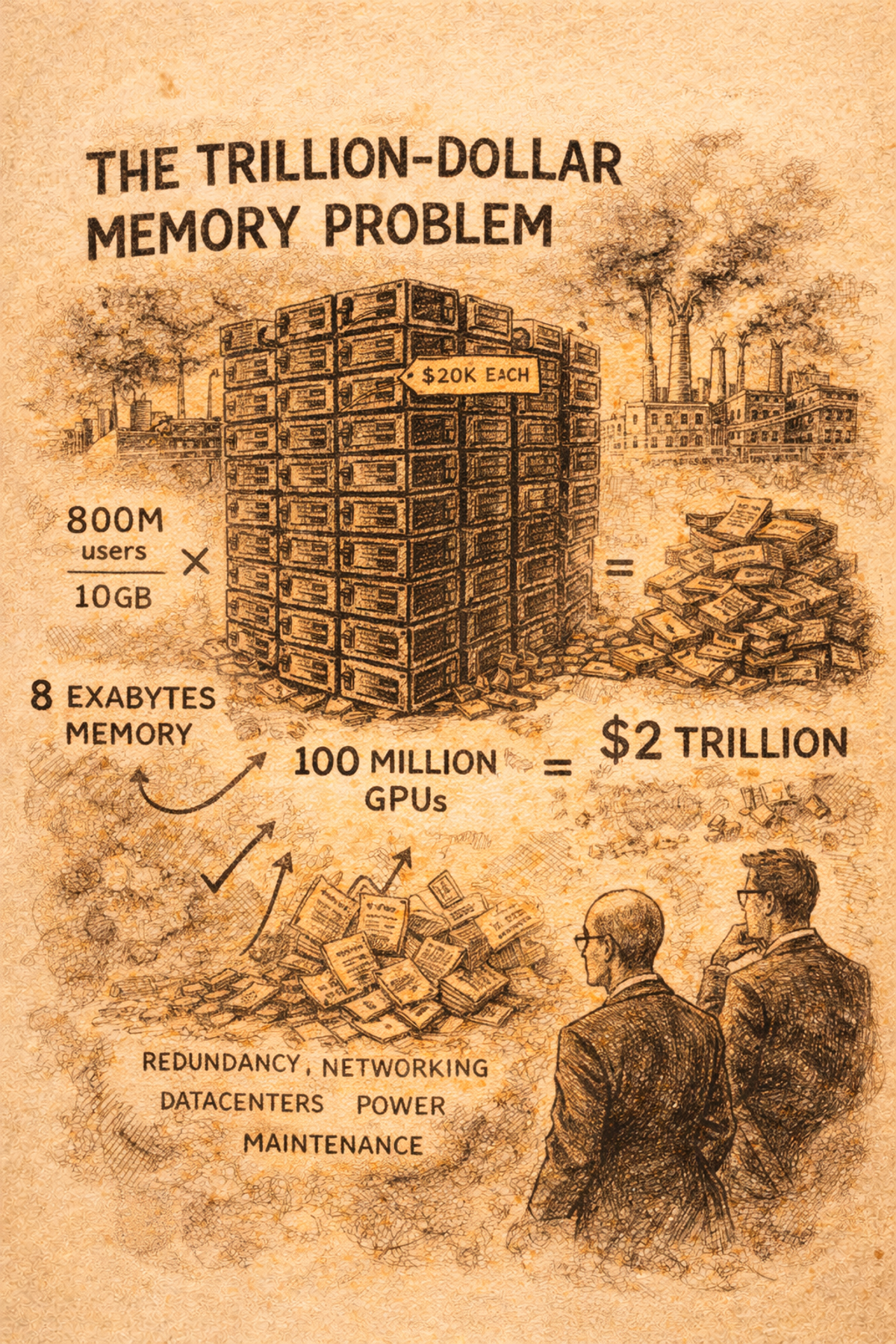

Let’s use a concrete example: ChatGPT has hundreds of millions of weekly active users, reaching around 800 million by late 2025 (and growing). Now imagine you want “real memory” not just storing text, but keeping enough working state that the model can reliably recall and use prior interactions without constantly reloading and reprocessing everything.

If you try to solve memory the “inside the model” way, by keeping a persistent working cache/state per user (the direction long context implicitly points toward) the costs get absurd fast.

Here’s a conservative back-of-the-envelope:

- Assume each active user needs the equivalent of ~10GB of high-speed working memory to support long-term conversational recall and reasoning (argue the exact number if you want, the order of magnitude is what matters).

- 800,000,000 users × 10GB = 8 billion GB, or 8 exabytes of high-performance memory.

- You can’t store this in cold storage and call it “memory.” If it’s meant to behave like native memory, it needs to live in GPU-class, high-bandwidth infrastructure.

- With ~80GB of usable memory per GPU, that’s: 8,000,000,000 GB ÷ 80GB ≈ 100 million GPUs.

- Even at an aggressively optimistic $20,000 per GPU, that’s: $2 trillion in GPUs alone.

That’s before redundancy, networking, datacenters, power, cooling, maintenance, or the fact that usage keeps growing.

This is why people who actually run the numbers land on the same conclusion: true AI memory at ChatGPT scale is a trillion-dollar problem, not a feature tweak.

The Portability Catch-22

This leads to another significant reason memory won’t be “solved” by the big labs.

Memory is the strongest moat in AI.

An assistant that remembers your history, preferences, workflows, and decisions is extremely hard to leave. Switching models means losing accumulated context and starting over.

Now imagine memory is solved properly: externalized, persistent, user-scoped, and usable across providers. In other words, portable memory.

That creates a catch-22:

- If big labs make memory portable, models become interchangeable. Intelligence becomes a commodity competing on price and latency. The moat disappears.

- If they don’t, memory stays shallow, fragmented, and half-broken, just good enough to feel magical, never good enough to make switching trivial.

From a business perspective, managed forgetfulness is the optimal equilibrium.

That’s why things look the way they do today. Context windows grow. Retrieval and memory are pushed onto developers. Limitations are framed as model constraints. Users adapt by re-explaining themselves and rebuilding state.

- The labs sell intelligence.

- Developers duct-tape together memory.

- Users accept the friction.

AI memory will be solved, but not inside the model, and not by the big labs in a way that makes it portable.

Because the moment memory becomes truly portable, so does their business.